Today I’ll show you how to stop wrestling with Kamal 2 and spend your time more productively. I spent several days configuring Kamal and… I failed spectacularly. I’ve been using Rails for over 15 years, and yet I felt completely stupid being stuck with this piece of… art (yes, definitely art!). Thanks to my friend and colleague Igor Aleksandrov, who helped tremendously and pointed out several issues with my configuration, I decided to write this guide to save you from making the same mistakes I did.


#Demo App

My configuration:

- Rails 8 application

- The Solid Stack trifecta - Solid Cache/Cable/Queue

- Kamal + Thruster for deployment

- Shrine for file uploads

- Extra environment (staging) for deployment

Let’s start from scratch. First, I’ll create a demo application that we’ll deploy:

rails new super_duper_app -d sqlite3

cd super_duper_app

bundle install

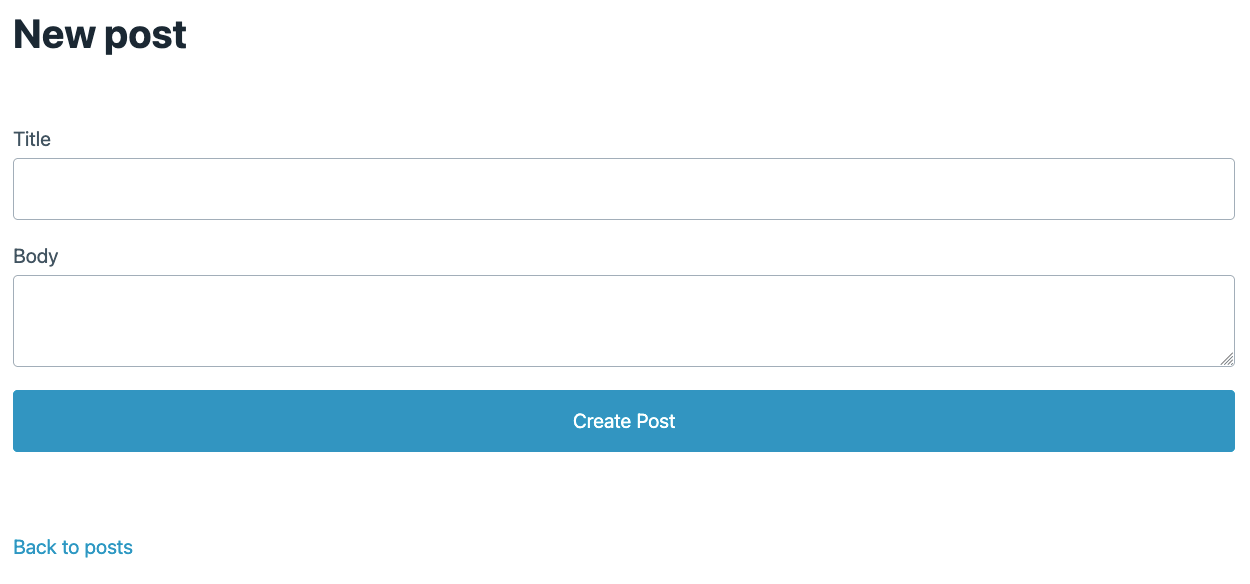

rails g scaffold Post title:string body:textAlmost done. Let’s make posts#index the root route and improve the views using libraries I described earlier to make the default views look less ugly.

#Server Preparation

Let’s prepare our server. You’ll need to create an account on DigitalOcean, Linode, AWS, Servers.com, or any other similar hosting platform. I recommend creating a separate user for deployment; you can name this account kamal, deploy, or even tonystark - whatever suits your fancy. You’ll also need to update packages and secure your server, but I’ll skip those topics in this post. Let’s update the default config and try to deploy our app.

#Default deploy.yml

service: super_duper_app # <-- the name of your app

image: apoimtsev/super_duper_app # <-- account and image name on hub.docker.com

servers:

web:

- 191.168.1.1 # <-- server ip

proxy:

ssl: true

host: super-duper.com # <-- hostname

registry:

username: apoimtsev # <-- registry username

password:

- KAMAL_REGISTRY_PASSWORD

env:

secret:

- RAILS_MASTER_KEY

clear:

SOLID_QUEUE_IN_PUMA: true

volumes:

- 'super_duper_app_storage:/rails/storage' # <-- volume name

asset_path: /rails/public/assets

builder:

arch: amd64

args:

RUBY_VERSION: <%= File.read(".ruby-version")[/(.*)/, 1] %>

RAILS_MASTER_KEY: <%= File.read("config/master.key")[/(.*)/, 1] %>

ssh:

user: deploy # <-- user on the server

aliases:

console: app exec --interactive --reuse "bin/rails console"

shell: app exec --interactive --reuse "bash"

logs: app logs -f

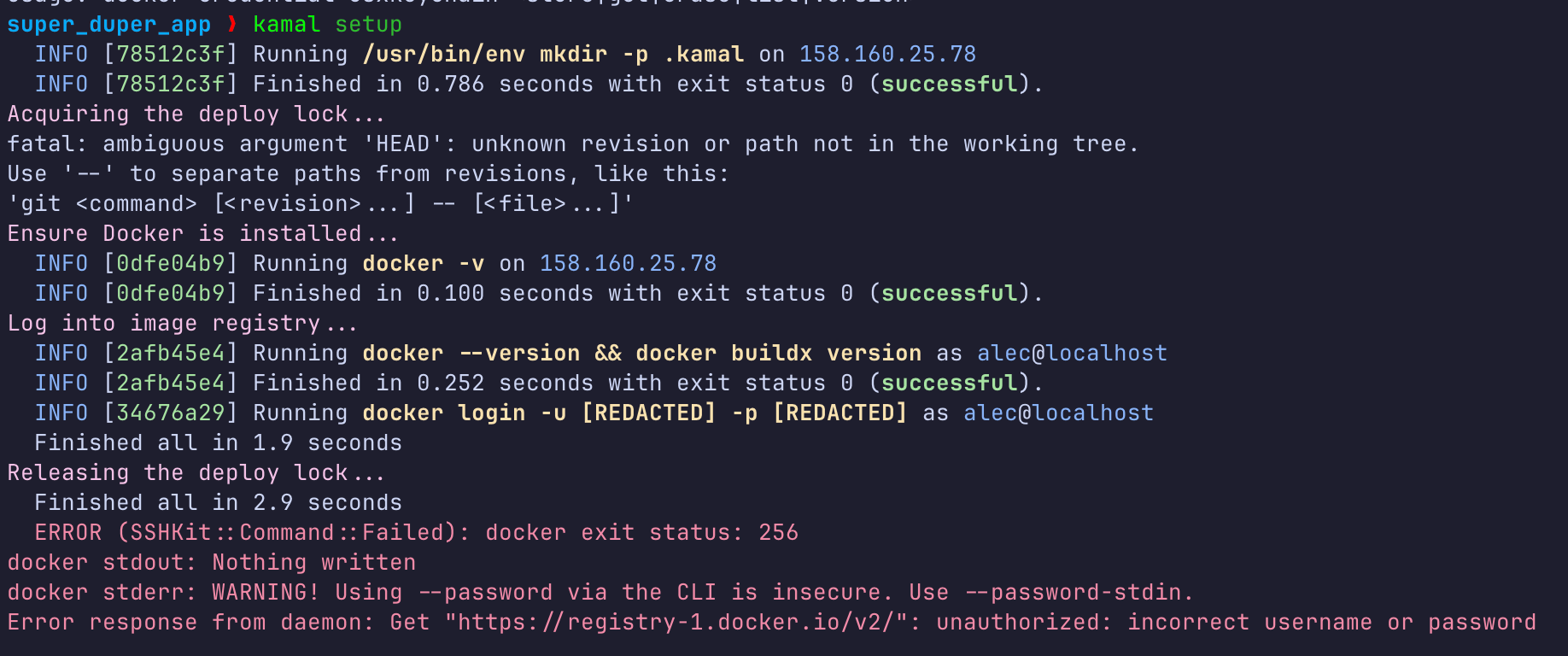

dbc: app exec --interactive --reuse "bin/rails dbconsole"When you run kamal setup, you’ll see something like this:

Something went wrong… Look Ma’ - I forgot to submit KAMAL_REGISTRY_PASSWORD! Ok, let’s fix it. First, we’ll create a .env file with our key (you can get a new one here):

KAMAL_REGISTRY_PASSWORD="your_secret_pass"Next, let’s install dotenvx or a similar tool to read and set environment variables from the .env file. Then let’s add this line to .kamal/secrets:

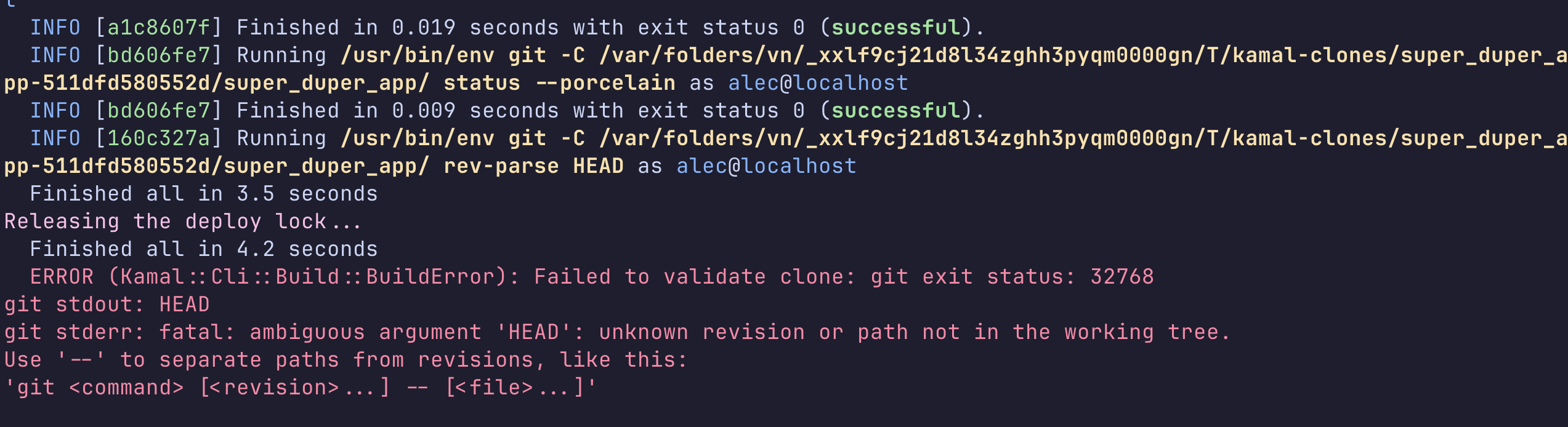

KAMAL_REGISTRY_PASSWORD=$(dotenvx get KAMAL_REGISTRY_PASSWORD --quiet -f .env)Then run kamal setup again… Looks better, but what the… oops, I forgot to git commit…

…once again:

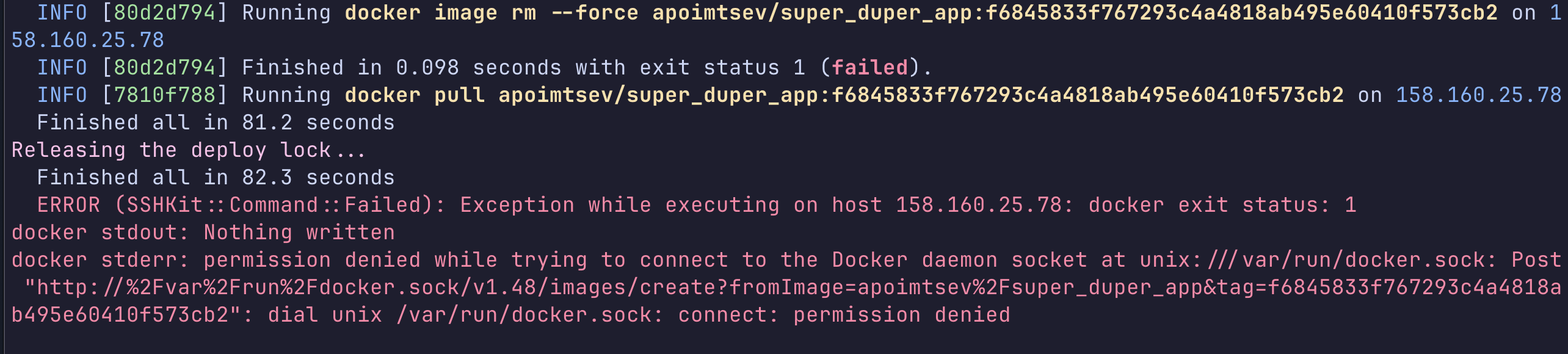

Another error - we forgot to add our user deploy to the docker group. You can fix this with sudo usermod -aG docker $USER from the server console.

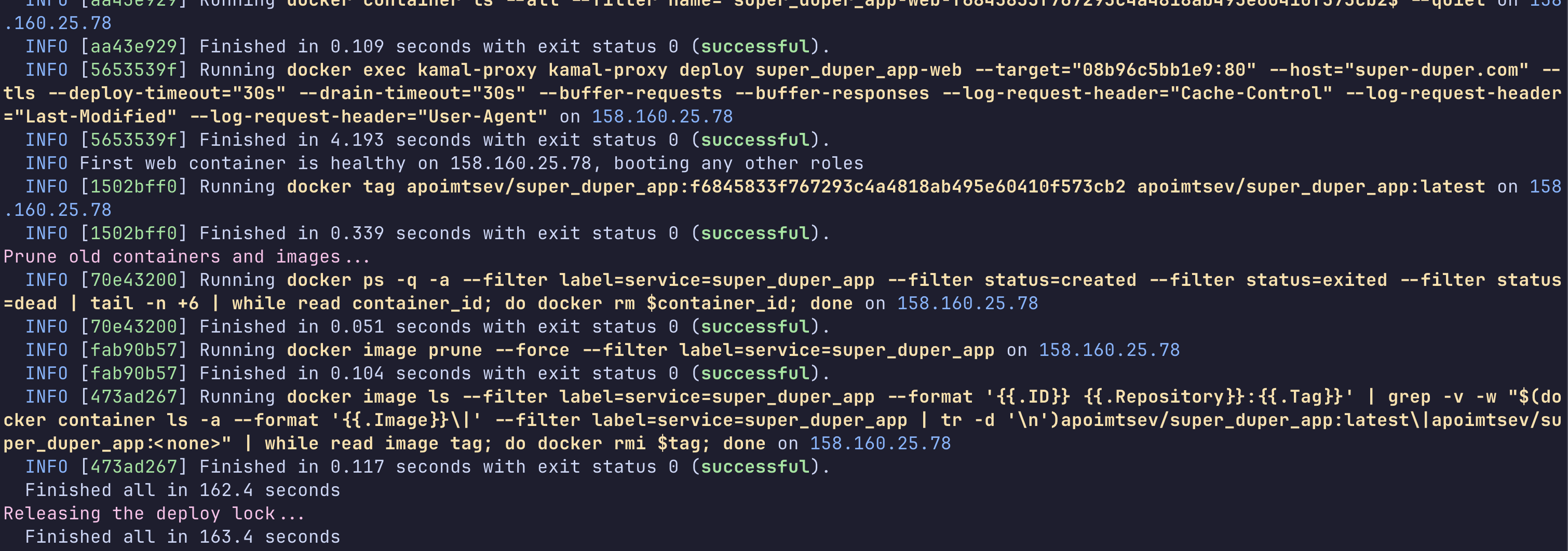

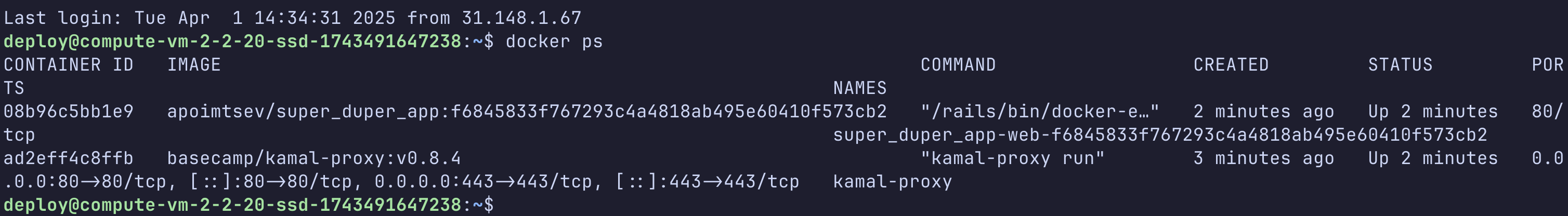

Looking good! Let’s check our server - login and run docker ps:

Our app is up and running, but our adventure isn’t over yet!

Let me show you some useful hacks to improve your setup.

#Database Configuration

If you’d like to use PostgreSQL or another database, you’ll need to update your deploy.yml file and add the following lines:

    +accessories:
    +  postgres:
    +    image: postgres:17
    +    roles:
    +      - web
    +    port: 5432
    +    directories:
    +      - data:/var/lib/postgresql/data
    +    files:
    +      - db/init.sql:/docker-entrypoint-initdb.d/setup.sql
    +    env:
    +      secret:
    +        - POSTGRES_DB
    +        - POSTGRES_PORT
    +        - POSTGRES_HOST
    +        - POSTGRES_USER
    +        - POSTGRES_PASSWORD

Of course, you’ll also need to read these secrets from the .env file. Update .kamal/secrets with the following:

    # Secrets defined here are available for reference under registry/password, env/secret, builder/secrets,
    # and accessories/*/env/secret in config/deploy.yml. All secrets should be pulled from either
    # password manager, ENV, or a file. DO NOT ENTER RAW CREDENTIALS HERE! This file needs to be safe for git.

    # Example of extracting secrets from 1password (or another compatible pw manager)
    # SECRETS=$(kamal secrets fetch --adapter 1password --account your-account --from Vault/Item KAMAL_REGISTRY_PASSWORD RAILS_MASTER_KEY)
    # KAMAL_REGISTRY_PASSWORD=$(kamal secrets extract KAMAL_REGISTRY_PASSWORD ${SECRETS})
    # RAILS_MASTER_KEY=$(kamal secrets extract RAILS_MASTER_KEY ${SECRETS})

    # Use a GITHUB_TOKEN if private repositories are needed for the image
    # GITHUB_TOKEN=$(gh config get -h github.com oauth_token)

    # Grab the registry password from ENV
    # KAMAL_REGISTRY_PASSWORD=$KAMAL_REGISTRY_PASSWORD

    # Improve security by using a password manager. Never check config/master.key into git!
     RAILS_MASTER_KEY=$(cat config/master.key)
    +KAMAL_REGISTRY_PASSWORD=$(dotenvx get KAMAL_REGISTRY_PASSWORD --quiet -f .env)
    +POSTGRES_DB=$(dotenvx get POSTGRES_DB --quiet -f .env)
    +POSTGRES_USER=$(dotenvx get POSTGRES_USER --quiet -f .env)
    +POSTGRES_PASSWORD=$(dotenvx get POSTGRES_PASSWORD --quiet -f .env)
    +POSTGRES_PORT=$(dotenvx get POSTGRES_PORT --quiet -f .env)

And configure database.yml:

     default: &default
       adapter: postgresql
       encoding: unicode
       pool: <%= ENV.fetch("RAILS_MAX_THREADS") { 5 } %>

     development:
       primary: &primary_development
         <<: *default
         database: super_duper_development
       cache:
         <<: *primary_development
         database: super_duper_development_cache
         migrations_paths: db/cache_migrate
       queue:
         <<: *primary_development
         database: super_duper_development_queue
         migrations_paths: db/queue_migrate
       cable:
         <<: *primary_development
         database: super_duper_development_cable
         migrations_paths: db/cable_migrate

     test:
       <<: *default
       database: super_duper_test

    +staging:
    +  primary: &primary_staging
    +    <<: *default
    +    database: <%= ENV['POSTGRES_DB'] %>
    +    username: <%= ENV['POSTGRES_USER'] %>
    +    password: <%= ENV['POSTGRES_PASSWORD'] %>
    +    host: <%= ENV['POSTGRES_HOST'] %>
    +    port: <%= ENV['POSTGRES_PORT'] %>
    +  cache:
    +    <<: *primary_staging
    +    database: super_duper_staging_cache
    +    migrations_paths: db/cache_migrate
    +  queue:
    +    <<: *primary_staging
    +    database: super_duper_staging_queue
    +    migrations_paths: db/queue_migrate
    +  cable:
    +    <<: *primary_staging
    +    database: super_duper_staging_cable
    +    migrations_paths: db/cable_migrate

     production:
       primary: &primary_production
         <<: *default
         database: <%= ENV['POSTGRES_DB'] %>
         username: <%= ENV['POSTGRES_USER'] %>
         password: <%= ENV['POSTGRES_PASSWORD'] %>
         host: <%= ENV['POSTGRES_HOST'] %>
         port: <%= ENV['POSTGRES_PORT'] %>
       cache:
         <<: *primary_production
         database: super_duper_cache
         migrations_paths: db/cache_migrate
       queue:
         <<: *primary_production
         database: super_duper_queue
         migrations_paths: db/queue_migrate
       cable:
         <<: *primary_production
         database: super_duper_cable
         migrations_paths: db/cable_migrate

Oops, spoiler alert - I’ll show you how to configure the Solid Stack and staging environment below.
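To see how the anchors and ERB tags in database.yml resolve, here is a minimal sketch that renders a trimmed-down copy of the production section: ERB first, then YAML with aliases enabled. The ENV values and the `render_database_config` helper are made up for illustration, and Rails itself does more than this when it loads the file.

```ruby
require "erb"
require "yaml"

# Trimmed-down copy of the production section from database.yml above.
DATABASE_YML = <<~YAML
  default: &default
    adapter: postgresql
    encoding: unicode

  production:
    primary: &primary_production
      <<: *default
      database: <%= ENV['POSTGRES_DB'] %>
      host: <%= ENV['POSTGRES_HOST'] %>
    cache:
      <<: *primary_production
      database: super_duper_cache
      migrations_paths: db/cache_migrate
YAML

# Hypothetical helper: render ERB, then parse YAML with aliases (&, *, <<) allowed.
def render_database_config(template)
  YAML.safe_load(ERB.new(template).result, aliases: true)
end

# Example values only; in a real deploy they come from the container environment.
ENV["POSTGRES_DB"] = "super_duper_production"
ENV["POSTGRES_HOST"] = "db.example.internal"

config = render_database_config(DATABASE_YML)
primary = config["production"]["primary"]
cache = config["production"]["cache"]

puts primary["database"] # taken from ENV
puts cache["host"]       # inherited from primary through the merge key
puts cache["database"]   # overridden for the cache database
```

The cache, queue, and cable entries inherit host, username, and password from the primary entry, so only the database name and migrations path need to be repeated.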

One more important thing - the db/init.sql file, which is required to set up the postgres container:

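    CREATE USER $POSTGRES_USER WITH PASSWORD '$POSTGRES_PASSWORD';
    CREATE DATABASE $POSTGRES_DB OWNER $POSTGRES_USER;

Finally, if you don’t know where to get the POSTGRES_HOST value - it is the name of the accessory container, which Kamal builds from the service name in the service section and the accessory name. By default the two are joined with a hyphen, so in our case it should be super_duper_app-postgres; run docker ps on the server to confirm the exact name.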

Please note that if you’d like to use RDS-like platforms, you don’t need to add the accessories/postgres section to your config - all you need is to add the database URL to your config file like in the example below:

    production:
      url: <%= ENV["MY_APP_DATABASE_URL"] %>

#Multi-Stage Environment

If you’re a professional developer, not just a “slap-shit-together-and-deploy” developer, you may need to configure a staging environment. Let’s make some changes to our configs.

First, create a deploy.staging.yml file and move some sections from deploy.yml as shown below:

deploy.yml

     service: super_duper_app
     image: apoimtsev/super_duper_app

    -servers:
    -  web:
    -    - 191.168.1.1 # <-- server ip
    -
    -proxy:
    -  ssl: true
    -  host: super-duper.com # <-- hostname

     registry:
       username: apoimtsev
       password:
         - KAMAL_REGISTRY_PASSWORD

    -env:
    -  secret:
    -    - RAILS_MASTER_KEY
    -  clear:
    -    SOLID_QUEUE_IN_PUMA: true
    -
    -volumes:
    -  - "super_duper_app_storage:/rails/storage" # <-- volume name

     builder:
       args:
         RUBY_VERSION: <%= File.read(".ruby-version")[/(.*)/, 1] %>
         RAILS_MASTER_KEY: <%= File.read("config/master.key")[/(.*)/, 1] %>
       arch: amd64
       secrets:
         - RAILS_MASTER_KEY

    -ssh:
    -  user: deploy

     aliases:
       console: app exec --interactive --reuse "bin/rails console"
       shell: app exec --interactive --reuse "bash"
       logs: app logs -f
       dbc: app exec --interactive --reuse "bin/rails dbconsole"

deploy.staging.yml

    <% require "dotenv"; Dotenv.load(".env.staging") %>

    env:
      clear:
        RAILS_ENV: staging
        RAILS_LOG_TO_STDOUT: true
        SOLID_QUEUE_IN_PUMA: true
      secret:
        - RAILS_MASTER_KEY

    proxy:
      ssl: true
      host: <%= ENV['DOMAIN']%>
      healthcheck:
        interval: 3
        path: /up
        timeout: 3

    servers:
      web:
        hosts:
          - <%= ENV['DOMAIN']%>

    volumes:
      - "/root/uploads:/rails/public/uploads"

    ssh:
      user: deploy

    accessories:
      postgres:
        image: postgres:17
        roles:
          - web
        port: 5432
        directories:
          - data:/var/lib/postgresql/data
        files:
          - db/init.sql:/docker-entrypoint-initdb.d/setup.sql
        env:
          secret:
            - POSTGRES_DB
            - POSTGRES_PORT
            - POSTGRES_USER
            - POSTGRES_PASSWORD

Let me point out one interesting thing:

    <% require "dotenv"; Dotenv.load(".env.staging") %>

This line loads .env.staging before the rest of the file is rendered, which lets you use environment variables like <%= ENV['DOMAIN']%> wherever you need Ruby code in the YAML file. This change was added in Kamal 2, and I have no idea why the 37signals team did this.
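A rough sketch of what happens to those tags: the YAML file is run through ERB before it is parsed, so anything in ENV at that moment ends up in the config. The snippet below imitates that with Ruby’s standard library. `STAGING_TEMPLATE`, `render_deploy_config`, and the DOMAIN value are illustrative, and ENV is set by hand where the real file calls Dotenv.load; this is not Kamal’s actual loader.

```ruby
require "erb"
require "yaml"

# Illustrative fragment of deploy.staging.yml; only the ERB-driven keys are kept.
STAGING_TEMPLATE = <<~YAML
  proxy:
    ssl: true
    host: <%= ENV['DOMAIN'] %>
  servers:
    web:
      hosts:
        - <%= ENV['DOMAIN'] %>
YAML

# Hypothetical helper: ERB runs first, YAML parsing second.
def render_deploy_config(template)
  YAML.safe_load(ERB.new(template).result)
end

# Stand-in for Dotenv.load(".env.staging"); the value is an example.
ENV["DOMAIN"] = "staging.super-duper.com"

config = render_deploy_config(STAGING_TEMPLATE)
puts config["proxy"]["host"]
puts config["servers"]["web"]["hosts"].inspect

# The [/(.*)/, 1] idiom used for RUBY_VERSION keeps the first line of a file
# without its trailing newline, because "." does not match "\n".
puts "3.3.5\n"[/(.*)/, 1].inspect
```

If DOMAIN is missing when the file is rendered, the tag expands to an empty string and the host ends up blank, which is worth checking before blaming Kamal.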

I mentioned that I use the Solid Stack, and for a staging environment, you’ll need to update the config files:

config/cable.yml

     development:
       adapter: solid_cable
       connects_to:
         database:
           writing: cable
       polling_interval: 0.1.seconds
       message_retention: 1.day

     test:
       adapter: test

     production:
       adapter: solid_cable
       connects_to:
         database:
           writing: cable
       polling_interval: 0.1.seconds
       message_retention: 1.day

    +staging:
    +  adapter: solid_cable
    +  connects_to:
    +    database:
    +      writing: cable
    +  polling_interval: 0.1.seconds
    +  message_retention: 1.day

config/cache.yml

     default: &default
       store_options:
         # Cap age of oldest cache entry to fulfill retention policies
         # max_age: <%= 60.days.to_i %>
         max_size: <%= 256.megabytes %>
         namespace: <%= Rails.env %>

     development:
       <<: *default

     test:
       <<: *default

     production:
       database: cache
       <<: *default

    +staging:
    +  database: cache
    +  <<: *default

config/queue.yml

     default: &default
       dispatchers:
         - polling_interval: 1
           batch_size: 500
       workers:
         - queues: "*"
           threads: 3
           processes: <%= ENV.fetch("JOB_CONCURRENCY", 1) %>
           polling_interval: 0.1

     development:
       <<: *default

     test:
       <<: *default

     production:
       <<: *default

    +staging:
    +  <<: *default

Let’s go back to our deploy.staging.yml. I’d like to point out another important line, which sets SOLID_QUEUE_IN_PUMA to true. This variable makes Puma start the Solid Queue supervisor alongside the web server, as described here.
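One detail worth keeping in mind: the puma.rb generated by Rails 8 enables the plugin with `plugin :solid_queue if ENV["SOLID_QUEUE_IN_PUMA"]`, and ENV values are always strings. The sketch below mirrors that check in plain Ruby (the `solid_queue_in_puma?` helper is made up for illustration) to show that any value, including "false", switches the plugin on.

```ruby
# Mirrors the `if ENV["SOLID_QUEUE_IN_PUMA"]` condition: in Ruby only nil and
# false are falsy, and ENV lookups return either a String or nil.
def solid_queue_in_puma?(env = ENV)
  env["SOLID_QUEUE_IN_PUMA"] ? true : false
end

puts solid_queue_in_puma?({ "SOLID_QUEUE_IN_PUMA" => "true" })  # enabled
puts solid_queue_in_puma?({ "SOLID_QUEUE_IN_PUMA" => "false" }) # still enabled
puts solid_queue_in_puma?({})                                   # disabled
```

So to run Solid Queue in a separate process, remove the variable from the env section rather than setting it to false.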

#Local Uploads with Shrine Gem

Sometimes you may need to use local uploads instead of S3-compatible storage. Personally, I prefer the Shrine gem over others. Let’s add this gem to our application:

    bundle add shrine
    rails generate migration add_image_data_to_posts image_data:jsonb # if you use postgres
    rails generate migration add_image_data_to_posts image_data:text # if you use sqlite3

Next, we need to create an initializer config/initializers/shrine.rb:

    require "shrine"
    require "shrine/storage/file_system"
    require "shrine/storage/memory"
    require "shrine/storage/s3"

    Shrine.plugin :activerecord
    Shrine.plugin :derivatives
    Shrine.plugin :determine_mime_type, analyzer: :marcel
    Shrine.plugin :download_endpoint, prefix: "downloads"
    Shrine.plugin :instrumentation, notifications: ActiveSupport::Notifications
    Shrine.plugin :pretty_location
    Shrine.plugin :remove_attachment
    Shrine.plugin :restore_cached_data
    Shrine.plugin :store_dimensions, analyzer: :fastimage
    Shrine.plugin :validation
    Shrine.plugin :validation_helpers

    ### Storages
    s3_options = {
      access_key_id: Rails.application.credentials.aws[:access_key_id],
      secret_access_key: Rails.application.credentials.aws[:secret_access_key],
      region: Rails.application.credentials.aws[:s3_region],
      bucket: Rails.application.credentials.aws[:s3_bucket]
    }

    Shrine.storages =
      if Rails.env.production?
        {
          cache: Shrine::Storage::S3.new(prefix: "cache", **s3_options),
          store: Shrine::Storage::S3.new(prefix: "store", **s3_options)
        }
      elsif Rails.env.test?
        {
          cache: Shrine::Storage::Memory.new,
          store: Shrine::Storage::Memory.new
        }
      else # development and staging
        {
          cache: Shrine::Storage::FileSystem.new("public", prefix: "uploads/cache"),
          store: Shrine::Storage::FileSystem.new("public", prefix: "uploads")
        }
      end

Don’t forget to add AWS-related credentials with bin/rails credentials:edit --environment staging:

aws:

access_key_id: '111'

secret_access_key: '222'

s3_region: '333'

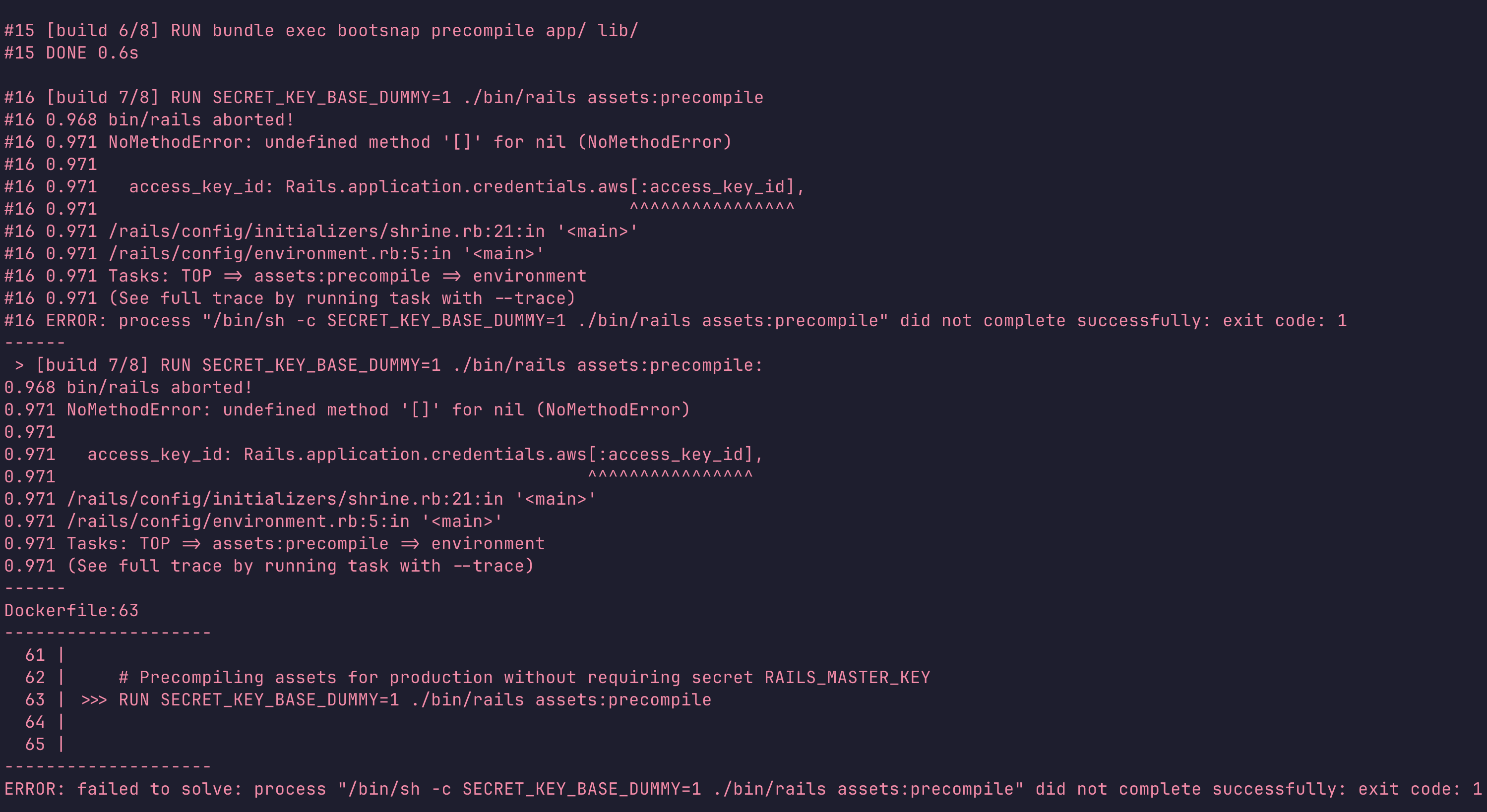

s3_bucket: '444'But if you run kamal deploy -d staging, you’ll see the following error:

The problem is with the s3_options hash. The initializer builds it on every boot, including the asset precompilation step of the Docker build. That step runs in the production environment with a dummy secret key (SECRET_KEY_BASE_DUMMY) instead of staging, so the staging credentials aren’t available there.
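The failure is easy to reproduce outside Rails. In the sketch below, `FakeCredentials` is a hypothetical stand-in for Rails.application.credentials in an environment where no aws section can be read, so `aws` returns nil and indexing into it raises NoMethodError before a storage is even chosen. The `eager_s3_options` and `lazy_s3_options` helpers are illustrative names, not part of Shrine or Rails.

```ruby
# Hypothetical stand-in for Rails.application.credentials when the aws
# section is not available (for example, during the image build).
FakeCredentials = Struct.new(:aws)

def eager_s3_options(credentials)
  # Same shape as the original initializer: evaluated on every boot.
  {
    access_key_id: credentials.aws[:access_key_id],
    bucket: credentials.aws[:s3_bucket]
  }
end

def lazy_s3_options(credentials, s3_needed:)
  # Only touch the credentials when the S3 storage is actually selected.
  return nil unless s3_needed

  eager_s3_options(credentials)
end

missing = FakeCredentials.new(nil)

begin
  eager_s3_options(missing)
rescue NoMethodError => e
  puts "eager lookup failed: #{e.class}"
end

puts lazy_s3_options(missing, s3_needed: false).inspect

present = FakeCredentials.new({ access_key_id: "111", s3_bucket: "444" })
puts lazy_s3_options(present, s3_needed: true).inspect
```

Moving the credential lookup inside the branch that needs it is the whole trick: the other environments never evaluate it.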

Let’s rewrite some code in our initializer to fix it:

     require "shrine"
     require "shrine/storage/file_system"
     require "shrine/storage/memory"
     require "shrine/storage/s3"

     Shrine.plugin :activerecord
     Shrine.plugin :derivatives
     Shrine.plugin :determine_mime_type, analyzer: :marcel
     Shrine.plugin :download_endpoint, prefix: "downloads"
     Shrine.plugin :instrumentation, notifications: ActiveSupport::Notifications
     Shrine.plugin :pretty_location
     Shrine.plugin :remove_attachment
     Shrine.plugin :restore_cached_data
     Shrine.plugin :store_dimensions, analyzer: :fastimage
     Shrine.plugin :validation
     Shrine.plugin :validation_helpers

     ### Storages
    -s3_options = {
    -  access_key_id: Rails.application.credentials.aws[:access_key_id],
    -  secret_access_key: Rails.application.credentials.aws[:secret_access_key],
    -  region: Rails.application.credentials.aws[:s3_region],
    -  bucket: Rails.application.credentials.aws[:s3_bucket]
    -}
    -
    -Shrine.storages =
    -  if Rails.env.production?
    -    {
    -      cache: Shrine::Storage::S3.new(prefix: "cache", **s3_options),
    -      store: Shrine::Storage::S3.new(prefix: "store", **s3_options)
    -    }
    -  elsif Rails.env.test?
    -    {
    -      cache: Shrine::Storage::Memory.new,
    -      store: Shrine::Storage::Memory.new
    -    }
    -  else # development and staging
    -    {
    -      cache: Shrine::Storage::FileSystem.new("public", prefix: "uploads/cache"),
    -      store: Shrine::Storage::FileSystem.new("public", prefix: "uploads")
    -    }
    -  end
    +Shrine.storages = case
    +when ENV["SECRET_KEY_BASE_DUMMY"]
    +  {
    +    cache: Shrine::Storage::Memory.new,
    +    store: Shrine::Storage::Memory.new
    +  }
    +when Rails.env.test?
    +  {
    +    cache: Shrine::Storage::Memory.new,
    +    store: Shrine::Storage::Memory.new
    +  }
    +when Rails.env.production?
    +  s3_options = {
    +    access_key_id: Rails.application.credentials.aws[:access_key_id],
    +    secret_access_key: Rails.application.credentials.aws[:secret_access_key],
    +    region: Rails.application.credentials.aws[:s3_region],
    +    bucket: Rails.application.credentials.aws[:s3_bucket]
    +  }
    +  {
    +    cache: Shrine::Storage::S3.new(prefix: "cache", **s3_options),
    +    store: Shrine::Storage::S3.new(prefix: "store", **s3_options)
    +  }
    +else # development and staging
    +  {
    +    cache: Shrine::Storage::FileSystem.new("public", prefix: "uploads/cache"),
    +    store: Shrine::Storage::FileSystem.new("public", prefix: "uploads")
    +  }
    +end

Create an uploads folder on the server and run chown 1000:1000 uploads. Next, we need to fine-tune our Dockerfile. Scroll down to the bottom:

     # Final stage for app image
     FROM base

    +RUN groupadd --system --gid 1000 rails && \
    +    useradd rails --uid 1000 --gid 1000 --create-home --shell /bin/bash
    +USER 1000:1000

     # Copy built artifacts: gems, application
     COPY --from=build "${BUNDLE_PATH}" "${BUNDLE_PATH}"
     COPY --from=build /rails /rails

     # Run and own only the runtime files as a non-root user for security
    -RUN groupadd --system --gid 1000 rails && \
    -    useradd rails --uid 1000 --gid 1000 --create-home --shell /bin/bash && \
    -    chown -R rails:rails db log storage tmp
    -USER 1000:1000

     # Entrypoint prepares the database.
     ENTRYPOINT ["/rails/bin/docker-entrypoint"]

     # Start server via Thruster by default, this can be overwritten at runtime
     EXPOSE 80
     CMD ["./bin/thrust", "./bin/rails", "server"]

#Conclusion (Step-by-Step Guide)

To summarize all the steps mentioned above:

- Create an app

- Create the main deploy.yml with the following content:

      service: super_duper_app
      image: apoimtsev/super_duper_app

      registry:
        username: apoimtsev
        password:
          - KAMAL_REGISTRY_PASSWORD

      builder:
        args:
          RUBY_VERSION: <%= File.read(".ruby-version")[/(.*)/, 1] %>
          RAILS_MASTER_KEY: <%= File.read("config/master.key")[/(.*)/, 1] %>
        arch: amd64
        secrets:
          - RAILS_MASTER_KEY

      aliases:
        console: app exec --interactive --reuse "bin/rails console"
        shell: app exec --interactive --reuse "bash"
        logs: app logs -f
        dbc: app exec --interactive --reuse "bin/rails dbconsole"

- Create deploy.staging.yml and deploy.production.yml with the following content:

      <% require "dotenv"; Dotenv.load(".env.staging") %>

      env:
        clear:
          RAILS_ENV: staging
          RAILS_LOG_TO_STDOUT: true
          SOLID_QUEUE_IN_PUMA: true
        secret:
          - RAILS_MASTER_KEY

      proxy:
        ssl: true
        host: <%= ENV['DOMAIN']%>
        healthcheck:
          interval: 3
          path: /up
          timeout: 3

      servers:
        web:
          hosts:
            - <%= ENV['DOMAIN']%>

      volumes:
        - "/root/uploads:/rails/public/uploads"

      ssh:
        user: deploy

      accessories:
        postgres:
          image: postgres:17
          roles:
            - web
          port: 5432
          directories:
            - data:/var/lib/postgresql/data
          files:
            - db/init.sql:/docker-entrypoint-initdb.d/setup.sql
          env:
            secret:
              - POSTGRES_DB
              - POSTGRES_PORT
              - POSTGRES_USER
              - POSTGRES_PASSWORD

- Create .env.staging and .env.production with all required environment variables:

      KAMAL_REGISTRY_PASSWORD=""
      DOMAIN="staging.super-duper.com"
      POSTGRES_USER='db_user'
      POSTGRES_PASSWORD='superpass'
      POSTGRES_DB='super_duper_staging'
      POSTGRES_PORT='5432'

- Update config/cable.yml, config/cache.yml, and config/queue.yml - add staging environment

- Update database.yml to add staging environment

- Update .kamal/secrets to set environment variables, copy this file to .kamal/secrets.staging, and change .env to .env.staging

- Create uploads folder on the server (unless you use S3-compatible storage) and run chown 1000:1000 uploads

- Update the default Dockerfile
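Since several of the failures in this post came down to a missing variable, a small preflight check can save a deploy attempt. The script below is a sketch only: `parse_env` is a deliberately naive parser (KEY=value lines, optional quotes, no multiline values or interpolation) and not a replacement for dotenv or dotenvx, and `REQUIRED_KEYS` simply repeats the variables listed for .env.staging in this post.

```ruby
# Variables the staging configs in this post read from .env.staging.
REQUIRED_KEYS = %w[
  KAMAL_REGISTRY_PASSWORD DOMAIN
  POSTGRES_USER POSTGRES_PASSWORD POSTGRES_DB POSTGRES_PORT
].freeze

# Naive .env parser: KEY=value per line, optional single or double quotes.
def parse_env(text)
  text.each_line.with_object({}) do |line, vars|
    line = line.strip
    next if line.empty? || line.start_with?("#")

    key, value = line.split("=", 2)
    next if value.nil?

    vars[key.strip] = value.strip.gsub(/\A(["'])(.*)\1\z/, '\2')
  end
end

# Returns the required keys that are absent or blank.
def missing_keys(vars, required = REQUIRED_KEYS)
  required.select { |key| vars[key].to_s.empty? }
end

sample = <<~ENVFILE
  KAMAL_REGISTRY_PASSWORD=""
  DOMAIN="staging.super-duper.com"
  POSTGRES_USER='db_user'
  POSTGRES_PASSWORD='superpass'
  POSTGRES_DB='super_duper_staging'
ENVFILE

puts missing_keys(parse_env(sample)).inspect
```

In a real project you would pass File.read(".env.staging") instead of the inline sample and stop the deploy when the returned list is not empty.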